UPDATES: The course code has been updated to the newest version of the AI libraries and now reflects the changes in the Vercel AI API.

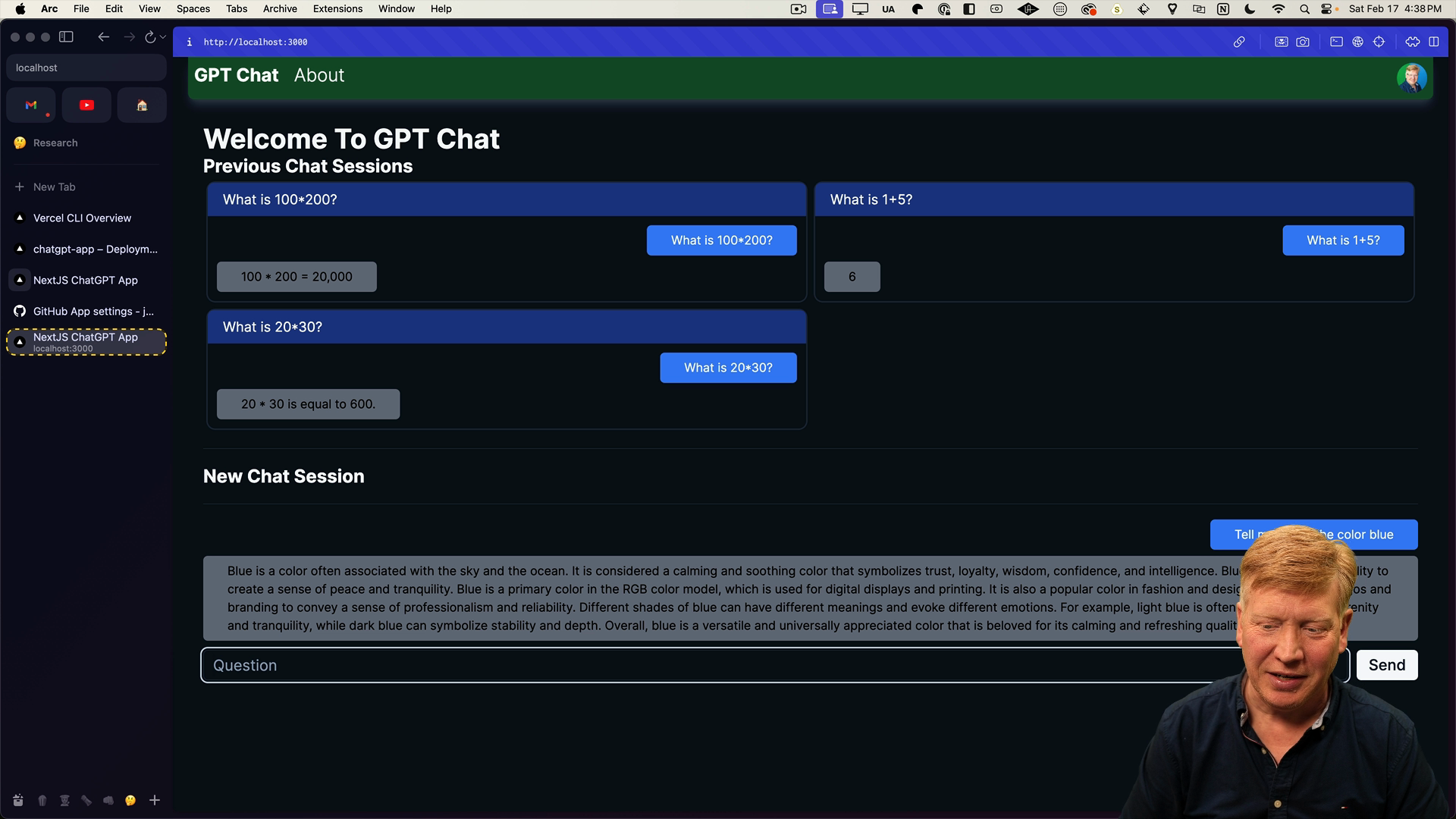

In our current application, we have a list of previous chats displayed on the homepage and a way to communicate with the AI.

However, the AI responses can be quite lengthy, and it takes a while for them to be fully displayed. This is because we are blocking the entire response until it's complete before updating the UI, which is not ideal.

Fortunately, the Vercel ai library that we installed earlier provides a way to implement streaming responses. This means that when you type in a question and receive a response, it comes in parts, similar to how ChatGPT works.

Let's integrate this feature into our application.

Setting up the API Endpoint

To start, we need to create an API endpoint that the Vercel ai library can communicate with.

Inside of the api directory, create a new file named chat/route.ts.

In this code, we import OpenAIStream and StreamingTextResponse from ai, then import and initialize an OpenAI instance with the API key, just like we did in the server action.

Then we define a POST function to handle the incoming requests. The function receives the request object, which is expected to be a JSON request containing the list of messages. We extract the messages using request.json(). For API endpoints you can handle any type of HTTP request, including GET, PUT, DELETE, etc. All you need to do is export a function named after the HTTP verb you want to handle.
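As a rough illustration of the verb-named exports described above, here is a sketch of a route file that handles GET and DELETE. The file path, the `name` query parameter, and the response bodies are made up for this example and aren't part of the course project. The sketch relies only on the standard `Request` and `Response` objects.

```typescript
// Hypothetical file: app/api/example/route.ts (illustration only)

// Handles GET requests to /api/example
export async function GET(req: Request): Promise<Response> {
  // Read an optional query parameter from the request URL
  const url = new URL(req.url);
  const who = url.searchParams.get("name") ?? "world";

  return new Response(JSON.stringify({ greeting: `Hello, ${who}` }), {
    status: 200,
    headers: { "Content-Type": "application/json" },
  });
}

// Handles DELETE requests to /api/example
export async function DELETE(): Promise<Response> {
  // 204 means "success, nothing to send back"
  return new Response(null, { status: 204 });
}
```

Each exported function only runs for its own HTTP method, so a file that exports just POST (like our chat endpoint) won't respond to the other verbs.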

Next, we call openai.chat.completions.create() to get the completions, but this time, we set the stream mode to true in the configuration object we pass in. This enables the streaming response.

Finally, we use OpenAIStream and StreamingTextResponse from the Vercel ai library to stream the response back to the client.

Here's the code all together:

    import OpenAI from "openai";
    import { OpenAIStream, StreamingTextResponse } from "ai";

    // Run this route on the Edge runtime
    export const runtime = "edge";

    const openai = new OpenAI({
      apiKey: process.env.OPENAI_API_KEY!,
    });

    export async function POST(req: Request) {
      // The client sends the conversation so far as JSON
      const { messages } = await req.json();

      // stream: true makes OpenAI return tokens as they are generated
      const response = await openai.chat.completions.create({
        model: "gpt-3.5-turbo",
        stream: true,
        messages,
      });

      // Forward the tokens to the client as they arrive
      const stream = OpenAIStream(response);
      return new StreamingTextResponse(stream);
    }

This code makes it so that as soon as we receive some tokens, we start sending them back to the client without waiting for the entire response to be complete.
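To make the difference concrete, here is a small framework-free sketch that contrasts the two approaches. It does not use the Vercel ai library or OpenAI at all: `fakeTokens` is a made-up stand-in for a model that produces text piece by piece, and `respondBlocking` and `respondStreaming` are hypothetical helper names.

```typescript
// Made-up stand-in for a model that emits one word at a time.
async function* fakeTokens(text: string, delayMs = 10): AsyncGenerator<string> {
  for (const word of text.split(" ")) {
    await new Promise((resolve) => setTimeout(resolve, delayMs));
    yield word + " ";
  }
}

// Blocking: the caller sees nothing until every token has arrived.
async function respondBlocking(source: AsyncIterable<string>): Promise<string> {
  let full = "";
  for await (const token of source) {
    full += token;
  }
  return full;
}

// Streaming: the caller is handed the partial text after every token.
async function respondStreaming(
  source: AsyncIterable<string>,
  onPartial: (partial: string) => void
): Promise<string> {
  let full = "";
  for await (const token of source) {
    full += token;
    onPartial(full);
  }
  return full;
}
```

Both functions end up with the same final text. The streaming version just lets the UI update along the way, which is roughly what the endpoint and the client do for us once they are wired together.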

Now that we have the API endpoint set up, let's update the Chat component to utilize the streaming response.

Updating the Chat Component

Open the Chat component from app/components/Chat.tsx.

At the top of the file, there are some imports we need to bring in: the useChat hook from ai/react and the Message type from ai. We'll import Message under the alias AIMessage so it's clear that it is the message type coming from the ai library:

    import type { Message as AIMessage } from "ai";
    import { useChat } from "ai/react";

There's now quite a bit of stuff we can remove from the existing component.

We no longer need the message-related state management, since we will be replacing it with the useChat hook. We'll destructure several properties from the call to useChat, and coerce the initialMessages to an array of our AIMessage type:

    // inside the component, replace the useState hooks with:
    const { messages, input, handleInputChange, handleSubmit, isLoading } =
      useChat({
        initialMessages: initialMessages as unknown as AIMessage[],
      });

Down in the Transcript component, we'll do the same coercion for the messages prop:

<Transcript messages={messages as AIMessage[]} truncate={false} />

Then we'll replace the existing div surrounding the existing Input with a new form. This form element will be managed by the useChat hook, so we'll pass the input and handleInputChange to the Input component and handleSubmit to the form:

    <form className="flex mt-3" onSubmit={handleSubmit}>
      <Input
        className="flex-grow text-xl"
        placeholder="Question"
        value={input}
        onChange={handleInputChange}
        autoFocus
      />
      <Button type="submit" className="ml-3 text-xl">
        Send
      </Button>
    </form>

We also no longer need the onClick handler, so it can be removed.

With these changes in place, we can check our work in the browser.

Checking Our Work

Over in the browser, refresh the page and ask a new question.

The response should start streaming in as it is generated, rather than waiting for the entire response to be complete before displaying it.

This works great, but we're no longer connected to the database. We need a way to update the chat in the database from the client-side.

Reconnecting the Database

In order to connect the useChat output to the database, we'll create a new server action called updateChat that will update the database every time there is a new message from the client.

To start, create a new server action updateChat.ts inside the server-actions directory. This server action will be similar to what we did in getCompletion, where we either created a new chat or updated an existing chat based on the chatId.

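We'll import getServerSession from NextAuth, as well as our createChat and updateChat functions from the database. Since the server action is also named updateChat, we import the database function under the alias updateChatMessages. Then we'll define an updateChat function that takes a chatId and messages as parameters. Inside the function, we first check whether a chatId was provided. If there isn't one, we create a new chat by passing the user's email, the content of the first message, and the full list of messages to createChat, and return its result, which the Chat component will use as the new chat's ID. Otherwise, we update the existing chat with the messages and return the same chatId.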

Here's the code all together:

    "use server";

    import { getServerSession } from "next-auth";
    import { createChat, updateChat as updateChatMessages } from "@/db";

    export const updateChat = async (
      chatId: number | null,
      messages: {
        role: "user" | "assistant";
        content: string;
      }[]
    ) => {
      const session = await getServerSession();

      if (!chatId) {
        // No chat yet: create one from the first message
        return await createChat(
          session?.user?.email!,
          messages[0].content,
          messages
        );
      } else {
        // Existing chat: store the latest messages
        await updateChatMessages(chatId, messages);
        return chatId;
      }
    };
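To see the create-or-update branching on its own, here is a sketch that swaps the database for an in-memory Map. Everything in it is an assumption made for illustration: `saveChat`, the `chats` map, and the ID counter are hypothetical, and the sketch simply overwrites the stored messages on update, which may not match what the real updateChat in "@/db" does.

```typescript
type ChatMessage = { role: "user" | "assistant"; content: string };

// Hypothetical in-memory stand-in for the database tables.
const chats = new Map<number, ChatMessage[]>();
let nextChatId = 1;

// Mirrors the branching in the server action: create when there is
// no chatId yet, otherwise update the existing chat.
function saveChat(chatId: number | null, messages: ChatMessage[]): number {
  if (chatId === null) {
    const newId = nextChatId++;
    chats.set(newId, [...messages]);
    return newId;
  }
  // Assumption: an update replaces the stored messages wholesale.
  chats.set(chatId, [...messages]);
  return chatId;
}

// First call has no ID, so a chat is created and its ID returned.
const firstId = saveChat(null, [{ role: "user", content: "Hi" }]);

// Later calls pass the ID back in and get the same ID out.
const secondId = saveChat(firstId, [
  { role: "user", content: "Hi" },
  { role: "assistant", content: "Hello!" },
]);
```

Either way the caller gets a chat ID back, which is what lets the client treat "first message" and "follow-up message" the same way.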

With the new server action done, we need to add it to the Chat component.

Updating the Chat Component with the New Server Action

Back in the Chat component, we'll replace getCompletion with the new updateChat server action.

First, import the server action:

import { updateChat } from "@/app/server-actions/updateChat";

We need to call updateChat every time there's a change in the messages, so we'll import the useEffect hook from React.

Add the useEffect hook to the component, with a dependency array of isLoading and messages from useChat, as well as router. The messages represent what will be added to the database, and router is there because otherwise ESLint will get angry.

Inside of the useEffect hook, we check if the chat is not loading and there are messages. If both conditions are met, we map over the messages to only include the role and content properties.

Then, we call the updateChat server action with the current chatId and the simplified messages. If there was no chatId yet, meaning this is a brand-new chat, we navigate to the new chat's page using router.push and then call router.refresh. Otherwise, we store the returned ID in chatId.current.

    useEffect(() => {
      (async () => {
        // Only save once the response has finished streaming
        if (!isLoading && messages.length) {
          // Keep just the fields the database needs
          const simplifiedMessages = messages.map((message) => ({
            role: message.role as "user" | "assistant",
            content: message.content,
          }));

          const newChatId = await updateChat(chatId.current, simplifiedMessages);

          if (chatId.current === null) {
            // New chat: move to its own page
            router.push(`/chats/${newChatId}`);
            router.refresh();
          } else {
            chatId.current = newChatId;
          }
        }
      })();
    }, [isLoading, messages, router]);

With these changes, the chat messages will be updated in the database whenever they change.
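The "should we save, and what do we save" part of the effect can also be pulled out and tried without React. The sketch below is one way to do that. `messagesToPersist`, `UIMessage`, and `StoredMessage` are hypothetical names, and the `UIMessage` shape is a simplified assumption rather than the ai library's exact Message type.

```typescript
// Simplified assumption of what a chat message looks like on the client.
type UIMessage = {
  id: string;
  role: "user" | "assistant" | "system";
  content: string;
  createdAt?: Date;
};

// The reduced shape that the server action accepts.
type StoredMessage = { role: "user" | "assistant"; content: string };

// Returns the messages to save, or null when nothing should be saved yet.
function messagesToPersist(
  isLoading: boolean,
  messages: UIMessage[]
): StoredMessage[] | null {
  // Skip while a response is still streaming, or when the chat is empty
  if (isLoading || messages.length === 0) return null;

  // Drop id, createdAt, and anything else the database doesn't need
  return messages.map((message) => ({
    role: message.role as "user" | "assistant",
    content: message.content,
  }));
}

const sampleMessages: UIMessage[] = [
  { id: "1", role: "user", content: "What is streaming?" },
  { id: "2", role: "assistant", content: "Sending data in parts." },
];
```

Calling `messagesToPersist(true, sampleMessages)` returns null because a response is still in flight, while `messagesToPersist(false, sampleMessages)` returns the two messages with only their role and content.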

Deploying to Production

Now that we have implemented streaming responses and database updates, it's time to push the changes to GitHub, which will trigger a deployment to Vercel.

The streaming responses feature of Vercel's ai library makes for a better user experience, and the database updates ensure that the chat messages are stored as they come in.