NOTE: OpenAI has a free tier, but you need to register a credit card with the account even on the free tier. If that is not acceptable to you, the ai library can integrate with a number of other AI providers, as well as local-only AI solutions like Ollama. (If you choose the local route, the AI will not work in production.)

Let's add the interactive ChatGPT functionality to our app. We want users to be able to input questions and get responses from an AI. We'll need two things for this: an input control, and a way to connect to OpenAI.

Installing Dependencies

The first thing to do is add the input control from shadcn. The command is similar to what we've used before:

npx shadcn-ui@latest add input

Next, we need to install the libraries for connecting to OpenAI. For this, we need the OpenAI library and the ai package from Vercel:

pnpm add ai openai

The Plan

The idea for the app goes something like this:

The UI will have an input field and a submit button. When the user submits a query, the app will send a request to the server. The server will then return a response, which we can display on the client side.

One of the easiest ways to do this in Next.js is to use a Server Action.

Server Actions are special functions you define that run only on the server. Whenever you call a server action from a client component, Next.js handles the fetch and data retrieval from the server for you.

Setting Up a Server Action

Let's set up a server action for connecting to OpenAI.

First, create a directory in your app for server actions, along with a getCompletion.ts file at src/app/server-actions/getCompletion.ts (the location that matches the @/app/server-actions/getCompletion import used later in the Chat component). The file is named this way because a "completion" is what it's called in AI land when you chat with a bot.

There are two different ways to define a server action:

Adding "use server"; at the top of the file indicates that every function inside the module is a server action. Alternatively, adding "use server"; inside the definition of a function indicates that the function is a server action.
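To make the function-level placement concrete, here is a minimal sketch. The saveNote name and its body are hypothetical and not part of this app; only the position of the directive matters. Outside of Next.js the directive is just an inert string, so the function behaves like any other async function.

```typescript
// Hypothetical example: saveNote is NOT part of this app.
// Function-level directive: only this function is marked as a server action.
// (With the file-level form, "use server" would instead be the first
// statement of the module and would apply to every exported function.)
export async function saveNote(text: string): Promise<{ saved: string }> {
  "use server";
  // Placeholder body; a real action would talk to a database or an API.
  return { saved: text.trim() };
}
```

The file-level form is usually the simpler choice when a module contains nothing but server actions, which is the case for getCompletion.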

For the getCompletion file, we'll put "use server"; at the top of the file. Next, we'll need to create an OPENAI_API_KEY environment variable, which we'll use to initialize the OpenAI library:

// inside server-actions/getCompletion.ts
"use server";

import OpenAI from "openai";

const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY,
});

When storing the environment variable, you can put it in either .env.local or .env.development.local.
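A forgotten or misspelled variable is an easy mistake to make here. As an optional safeguard, a small helper along these lines could fail early with a clear message. This is only a sketch: requireEnv and the Env type are hypothetical names, not part of the openai package or Next.js.

```typescript
// Hypothetical helper; not part of the openai package or Next.js.
type Env = Record<string, string | undefined>;

// Returns the variable's value, or throws if it is missing or blank.
export function requireEnv(name: string, env: Env): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing environment variable: ${name}`);
  }
  return value;
}

// In getCompletion.ts this might be used as:
//   const openai = new OpenAI({
//     apiKey: requireEnv("OPENAI_API_KEY", process.env),
//   });
```

Passing the environment in as an argument keeps the helper easy to exercise without touching the real process environment.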

Next, we'll define the getCompletion function. It will take in a messageHistory that is an array of objects that act as a transcript of the messages between the user and the AI assistant. This is nice because it gives the AI some history of the conversation so far:

// inside server-actions/getCompletion.ts
export async function getCompletion(
  messageHistory: {
    role: "user" | "assistant";
    content: string;
  }[]
) {
  // function implementation will be here
}
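For a sense of what gets passed in, here is an illustrative messageHistory value. The ChatMessage alias and the conversation itself are made up for this example; only the role and content fields come from the signature above.

```typescript
// Alias for the element type used in the getCompletion signature.
type ChatMessage = { role: "user" | "assistant"; content: string };

// Illustrative transcript; the questions and answers are made up.
const messageHistory: ChatMessage[] = [
  { role: "user", content: "What is 1 + 2?" },
  { role: "assistant", content: "1 + 2 = 3." },
  { role: "user", content: "And if you double that?" },
];
```

Because the earlier turns are sent along with the latest question, the model has the context it needs to work out what "that" refers to.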

The function will send the messageHistory to OpenAI using the gpt-3.5-turbo model. There are several models to choose from, but this one is among the fastest and cheapest:

// inside the getCompletion function
const response = await openai.chat.completions.create({
  model: "gpt-3.5-turbo",
  messages: messageHistory,
});

Once we get the response, we concatenate it with the old message history and return an object with the messages:

const messages = [
  ...messageHistory,
  response.choices[0].message as unknown as {
    role: "user" | "assistant";
    content: string;
  },
];

return { messages };
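The double cast above works, but it hides the fact that the reply's content can be null in the SDK's types. One possible alternative is to build the new entry explicitly. This is a sketch under assumptions: appendReply and ReplyLike are hypothetical names, and ReplyLike is a simplified stand-in for the SDK's message type rather than the real thing.

```typescript
type ChatMessage = { role: "user" | "assistant"; content: string };

// Simplified stand-in for the reply message returned by the SDK
// (assumption: content may be null, so it is normalized below).
type ReplyLike = { role: string; content: string | null };

// Returns a new array with the assistant's reply appended.
// The original history array is left untouched.
export function appendReply(
  history: ChatMessage[],
  reply: ReplyLike
): ChatMessage[] {
  return [...history, { role: "assistant", content: reply.content ?? "" }];
}

// Possible use inside getCompletion:
//   return { messages: appendReply(messageHistory, response.choices[0].message) };
```

Since the reply always comes from the model, the role is set to "assistant" directly instead of being trusted from the response.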

We'll do more with the messages as we continue building the application, but for now we'll move on to building the chat component.

Building the Chat Component

Create a new file at components/Chat.tsx. Because the component is interactive and holds state, it needs to be a client component, so we'll add "use client"; at the top of the file. We'll import the useState Hook from React, along with the input and button from shadcn:

// inside components/Chat.tsx
"use client";

import { useState } from "react";

import { Input } from "@/components/ui/input";
import { Button } from "@/components/ui/button";

The component's state will consist of a transcript of the messages so far, each with a role and content, plus the current message being typed. We'll create a Message interface that matches the shape of a single message:

interface Message {
  role: "user" | "assistant";
  content: string;
}

The Chat component will be the default export for the file. It will have a state for the message history and the current message being typed:

export default function Chat() {
  const [messages, setMessages] = useState<Message[]>([]);
  const [message, setMessage] = useState("");
}

Next, we'll add some markup for the actual chat UI.

We'll format it so that AI messages are on the left and user messages are on the right:

// inside the Chat component return
return (
  <div className="flex flex-col">
    {messages.map((message, i) => (
      <div
        key={i}
        className={`mb-5 flex flex-col ${
          message.role === "user" ? "items-end" : "items-start"
        }`}
      >
        <div
          className={`${
            message.role === "user" ? "bg-blue-500" : "bg-gray-500 text-black"
          } rounded-md py-2 px-8`}
        >
          {message.content}
        </div>
      </div>
    ))}

Below this, we'll add in the Input control. Its value will be the current message, and its onChange will call the setMessage function with the new value. We'll also add an onKeyUp handler that will call the onClick function when the user presses the Enter key:

<div className="flex border-t-2 border-t-gray-500 pt-3 mt-3">
  <Input
    className="flex-grow text-xl"
    placeholder="Question"
    value={message}
    onChange={(e) => setMessage(e.target.value)}
    onKeyUp={(e) => {
      if (e.key === "Enter") {
        onClick();
      }
    }}
  />
</div>

Next we'll import the getCompletion server action and create the onClick handler.

The onClick handler is an async function that calls getCompletion with the current messages plus the new message, and awaits the result. When the response comes back, it clears the current message and sets the list of messages to whatever came back from the server action:

// at the top of the file
import { getCompletion } from "@/app/server-actions/getCompletion";

// inside the Chat component above the return
const onClick = async () => {
  const completions = await getCompletion([
    ...messages,
    {
      role: "user",
      content: message,
    },
  ]);
  setMessage("");
  setMessages(completions.messages);
};
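As written, the handler sends a request even when the input is empty. One optional refinement is to build the outgoing history in a small pure function that refuses blank input. This is a sketch rather than part of the original component: buildNextHistory is a hypothetical name, and Message mirrors the interface defined earlier.

```typescript
type Message = { role: "user" | "assistant"; content: string };

// Hypothetical helper: returns the history to send to getCompletion,
// or null when the draft contains nothing but whitespace.
export function buildNextHistory(
  messages: Message[],
  draft: string
): Message[] | null {
  const content = draft.trim();
  if (content === "") return null;
  return [...messages, { role: "user", content }];
}

// Possible use inside onClick:
//   const next = buildNextHistory(messages, message);
//   if (next === null) return; // nothing to send
//   const completions = await getCompletion(next);
```

Keeping this logic outside the component also makes it straightforward to test without rendering anything.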

Finally, we'll add the Button component for sending the message below the Input, with its onClick set to the onClick handler:

<Button onClick={onClick} className="ml-3 text-xl">
  Send
</Button>

Adding the Chat Component to the App

Now that we've written the Chat component, we need to add it to our app:

Inside of app/page.tsx, import the component and add it to the page below the h1:

import Chat from "@/components/Chat";

export default function Home() {
  return (
    <main>
      <h1 className="text-4xl font-bold">Welcome to GPT Chat</h1>
      <Chat />
    </main>
  );
}

At this point, we can test our application.

Testing Our App

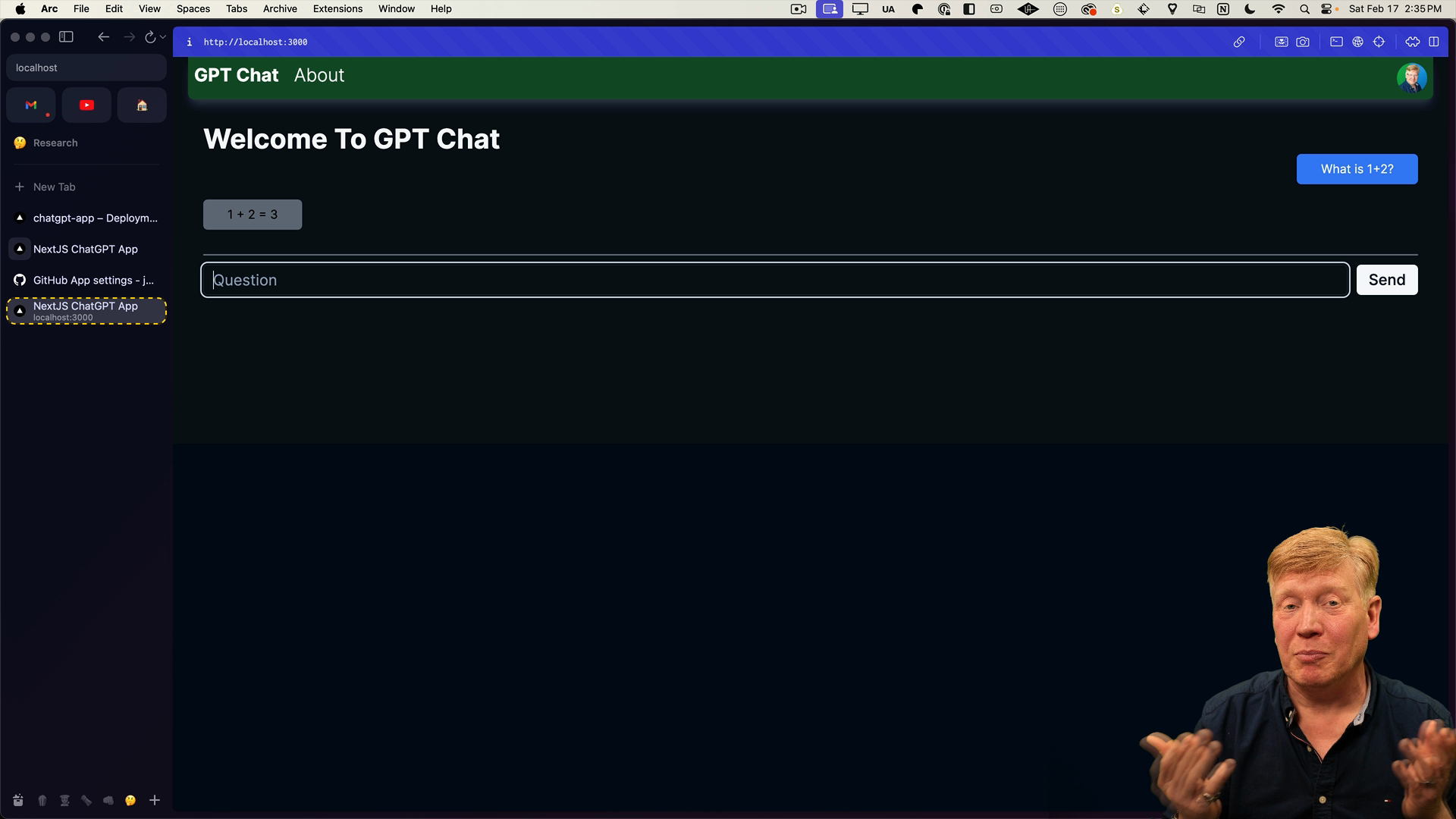

Back in the browser, we should see the input control and the send button. When we type in a question and press the send button, we should get a response back from the AI:

In this case, the AI answers our question that 1 + 2 = 3, so the app is working!

What's happening is that we are calling the getCompletion server action from the client as if it were a local function. Next.js does the fetch for us, the data comes back, the messages array is populated, and the messages are then formatted for the UI.

It's working great, but there are some improvements we can make.

Make the UI More Visually Pleasing

To make the UI a bit more visually pleasing, we'll bring in the Separator component from shadcn. First, install the component in the terminal, using the same command we used for the input:

npx shadcn-ui@latest add separator

Then we'll import it from @/components/ui/separator and add it between the heading and the Chat component on the homepage:

<main>
  <h1 className="text-4xl font-bold">Welcome to GPT Chat</h1>
  <Separator className="my-5" />
  <Chat />
</main>

The UI looks better now, but more importantly we need to make sure that the chat functionality only shows if the user is logged in.

Require Authentication

The Home component inside of page.tsx is a React Server Component (RSC), because we didn't specify "use client"; at the top of the file. This means that we can't use the useSession hook, which only works in client components.

Instead, we need to use getServerSession from NextAuth:

import { getServerSession } from "next-auth";

Because getServerSession is async, we need to update the Home component to be an async function. Inside of the component, we'll create a new session variable that we'll get by awaiting the result of getServerSession:

export default async function Home() {
  const session = await getServerSession();
  ...

We'll then add a conditional to check if the user is logged in by looking at session?.user?.email. If they are, we'll show the Chat component. If they're not, we'll show a message telling them to log in:

export default async function Home() {
  const session = await getServerSession();

  return (
    <main className="p-5">
      <h1 className="text-4xl font-bold">Welcome To GPT Chat</h1>
      {!session?.user?.email && <div>You need to log in to use this chat.</div>}
      {session?.user?.email && (
        <>
          <Separator className="my-5" />
          <Chat />
        </>
      )}
    </main>
  );
}
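The session?.user?.email check appears twice in the markup above. If that repetition becomes a nuisance, the test could be pulled into a small helper. The sketch below is an assumption-laden illustration: isLoggedIn and SessionLike are hypothetical names, and SessionLike models only the fields this page reads rather than the full NextAuth session type.

```typescript
// Minimal stand-in for the session object (assumption: only the fields
// read by the page are modeled here).
type SessionLike = { user?: { email?: string | null } | null } | null;

// True only when a session exists and carries a non-empty email.
export function isLoggedIn(session: SessionLike): boolean {
  return Boolean(session?.user?.email);
}

// Possible use inside Home:
//   const loggedIn = isLoggedIn(session);
//   {!loggedIn && <div>You need to log in to use this chat.</div>}
//   {loggedIn && ( ... )}
```

Optional chaining makes each step safe: a missing session, a missing user, and a missing email all produce false.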

Refreshing the browser, we should be able to log in and see the chat functionality. If we log out, the chat functionality should disappear.

Deployment and Next Steps

Before you push to deployment, make sure to add the OPENAI_API_KEY environment variable to your production environment on Vercel.

Then you can push your changes to production by adding the new files and committing and pushing your changes in git:

git add -A && git commit -m "Interactivity" && git push -u origin main

Upon successful deployment, your app should be able to send user queries to OpenAI and display the responses, just as it does locally.

Now that our application is talking to OpenAI, the next thing we'll want is to save our conversations. So, for our next step, we're going to learn how to use a database, both locally and in production.